Go: Performance impact of channel buffers

Channels are one of the most fundamental constructs in Go.

They have many different uses: generally, communicating values between goroutines, but also easily implementing other common constructs such as futures, semaphores, or very simple synchronization primitives, such as the traditional done channel.
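
To make two of those uses concrete, here is a minimal sketch of a buffered channel used as a counting semaphore, together with a done channel. The names runLimited, sem and done are made up for this illustration, and the peak counter is only there to make the limit observable.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// runLimited (hypothetical helper) starts `tasks` goroutines but lets at most
// `limit` of them work at the same time, using a buffered channel as a
// counting semaphore. It returns the highest concurrency level it observed.
func runLimited(tasks, limit int) int64 {
	sem := make(chan struct{}, limit) // capacity = number of available slots
	var wg sync.WaitGroup
	var running, peak int64

	for i := 0; i < tasks; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			sem <- struct{}{}        // acquire: blocks while all slots are taken
			defer func() { <-sem }() // release the slot when done

			cur := atomic.AddInt64(&running, 1)
			// Record the highest number of simultaneous workers seen so far.
			for {
				p := atomic.LoadInt64(&peak)
				if cur <= p || atomic.CompareAndSwapInt64(&peak, p, cur) {
					break
				}
			}
			atomic.AddInt64(&running, -1)
		}()
	}
	wg.Wait()
	return peak
}

func main() {
	done := make(chan struct{})
	go func() {
		defer close(done) // closing the channel signals completion
		fmt.Println("peak concurrency:", runLimited(20, 3))
	}()
	<-done // blocks until the goroutine above has finished
}
```

The reported peak can vary from run to run, but it should never exceed the semaphore's capacity.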

To buffer or not to buffer

Channels can be unbuffered, or they can have a buffer with a given size. Some use cases necessitate an unbuffered channel, while others require a buffer of a specific size. But there are also cases where using a buffer or not doesn’t really change the overall behavior, such as when using channels to pass data between goroutines that don’t need any fancy synchronization, i.e. the typical pipeline:

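package main

import "fmt"

func main() {
	pipeline := make(chan int)

	// Producer: send ten squares, then close the channel.
	go func() {
		for i := 0; i < 10; i++ {
			pipeline <- i * i
		}
		close(pipeline)
	}()

	// Consumer: print values until the channel is closed.
	for i := range pipeline {
		fmt.Println(i)
	}
}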

In that case, replacing the unbuffered pipeline := make(chan int) with a buffered pipeline := make(chan int, 42) wouldn’t change the output of the program. Try it.
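
One way to check this claim without comparing terminal output by eye is to collect the values instead of printing them. The sketch below assumes a helper named squares (not part of the original program) that runs the same pipeline with a configurable buffer size.

```go
package main

import (
	"fmt"
	"reflect"
)

// squares (hypothetical helper) runs the producer/consumer pipeline with the
// given buffer size and returns every value the consumer received, in order.
func squares(bufSize int) []int {
	pipeline := make(chan int, bufSize) // a size of 0 means unbuffered
	go func() {
		for i := 0; i < 10; i++ {
			pipeline <- i * i
		}
		close(pipeline)
	}()

	var got []int
	for v := range pipeline {
		got = append(got, v)
	}
	return got
}

func main() {
	unbuffered := squares(0)
	buffered := squares(42)
	fmt.Println(unbuffered)                              // [0 1 4 9 16 25 36 49 64 81]
	fmt.Println(reflect.DeepEqual(unbuffered, buffered)) // true
}
```

With a single producer, a channel delivers values in the order they were sent, which is why the buffer size has no effect on the result here.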

However, while it wouldn’t change the output of the program, it can very well change its performance.

Performance impact

With an unbuffered channel, every send has to wait for a matching receive, so the consumer and producer goroutines keep blocking each other: they must take turns for every single message, and each of those context switches takes some time. With a buffered channel, on the other hand, the producer can put many messages in the buffer at once, and then, after a single context switch, the consumer can read many messages as well, which allows for better throughput.
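
The blocking behavior itself is easy to observe. The following sketch counts how many sends succeed when no receiver is running at all; sendsWithoutReceiver is a name invented for this example, and the select with a default case is only there to detect the point where a send would block.

```go
package main

import "fmt"

// sendsWithoutReceiver (hypothetical helper) counts how many values can be
// sent on a channel of the given buffer size before a send would block,
// with no receiver running.
func sendsWithoutReceiver(bufSize int) int {
	c := make(chan int, bufSize)
	sent := 0
	for {
		select {
		case c <- sent:
			sent++
		default:
			// The send would block: the buffer is full or, for an
			// unbuffered channel, no receiver is ready.
			return sent
		}
	}
}

func main() {
	fmt.Println(sendsWithoutReceiver(0))  // 0
	fmt.Println(sendsWithoutReceiver(10)) // 10
}
```

An unbuffered channel accepts nothing until a receiver shows up, while a buffer of 10 lets the producer run ahead by 10 messages before it has to yield.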

Let’s benchmark.

package main

import (
	"sync"
	"testing"
)

func BenchmarkUnbuffered(b *testing.B)   { bench(b, 0) }
func BenchmarkBuffer1(b *testing.B)      { bench(b, 1) }
func BenchmarkBuffer3(b *testing.B)      { bench(b, 3) }
func BenchmarkBuffer10(b *testing.B)     { bench(b, 10) }
func BenchmarkBuffer30(b *testing.B)     { bench(b, 30) }
func BenchmarkBuffer100(b *testing.B)    { bench(b, 100) }
func BenchmarkBuffer300(b *testing.B)    { bench(b, 300) }
func BenchmarkBuffer1000(b *testing.B)   { bench(b, 1000) }
func BenchmarkBuffer3000(b *testing.B)   { bench(b, 3000) }
func BenchmarkBuffer10000(b *testing.B)  { bench(b, 10000) }
func BenchmarkBuffer30000(b *testing.B)  { bench(b, 30000) }
func BenchmarkBuffer100000(b *testing.B) { bench(b, 100000) }

// bench sends b.N values through a channel with the given buffer size
// to a single consumer goroutine that discards them.
func bench(b *testing.B, bufSize int) {
	c := make(chan int, bufSize)

	// Consumer: drain the channel until it is closed.
	var wg sync.WaitGroup
	wg.Add(1)
	go func() {
		for range c {
		}
		wg.Done()
	}()

	// Exclude the setup above from the measurement.
	b.ResetTimer()
	for n := 0; n < b.N; n++ {
		c <- n
	}
	close(c)
	wg.Wait() // include the time the consumer needs to finish draining
}

$ go test -bench=.
goos: linux
goarch: amd64
pkg: bench_channels
cpu: AMD Ryzen 7 3700X 8-Core Processor
BenchmarkUnbuffered-16         6557785    263.5 ns/op
BenchmarkBuffer1-16            6488571    198.8 ns/op
BenchmarkBuffer3-16            8541388    148.9 ns/op
BenchmarkBuffer10-16          12418048    99.66 ns/op
BenchmarkBuffer30-16          15868773    70.58 ns/op
BenchmarkBuffer100-16         18275827    58.33 ns/op
BenchmarkBuffer300-16         18195315    64.29 ns/op
BenchmarkBuffer1000-16        16519131    69.07 ns/op
BenchmarkBuffer3000-16        23020892    52.69 ns/op
BenchmarkBuffer10000-16       28517798    47.87 ns/op
BenchmarkBuffer30000-16       22563667    49.93 ns/op
BenchmarkBuffer100000-16      24544315    46.84 ns/op
PASS
ok      bench_channels  18.502s

Results

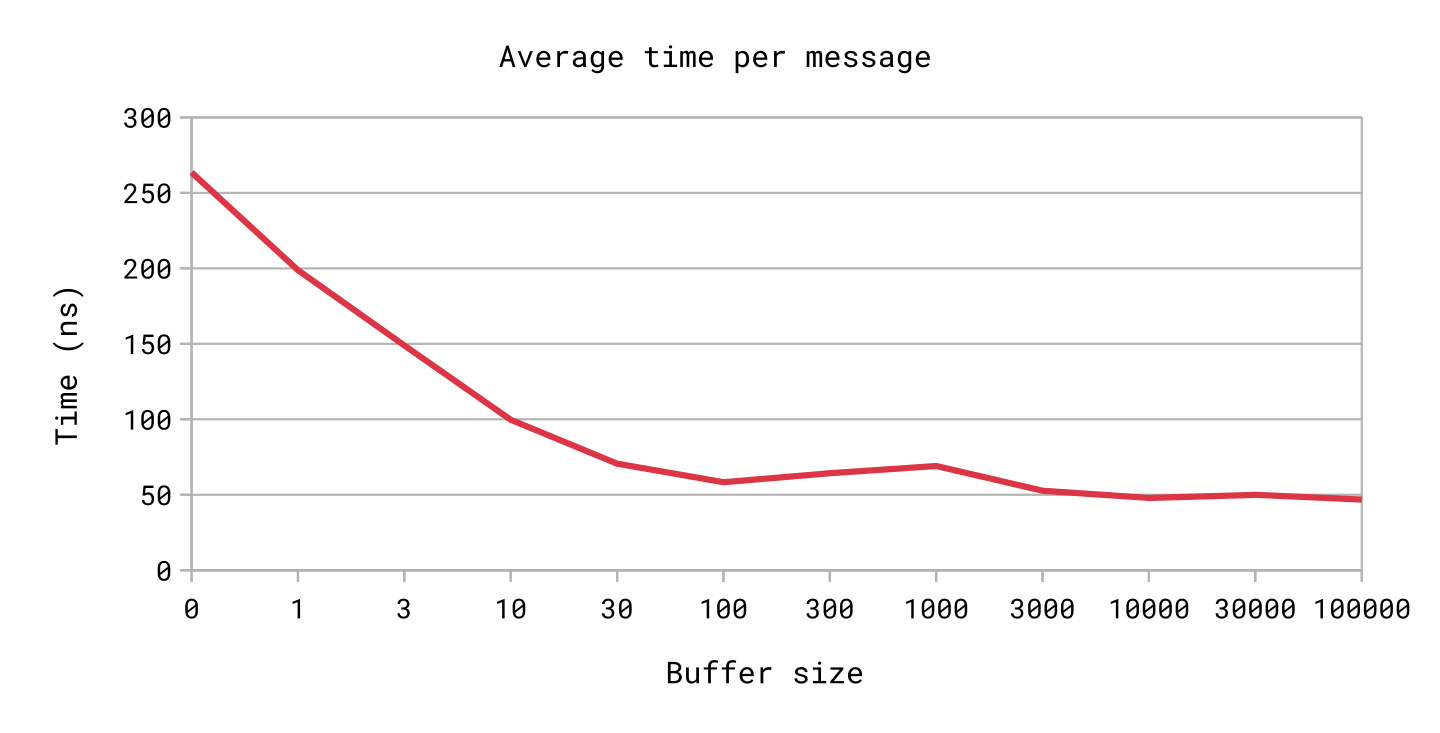

As the benchmark shows, even a buffer as small as 10 makes each send about 2.6 times faster than with an unbuffered channel (99.66 ns versus 263.5 ns). A buffer of 100 makes it about 4.5 times faster (58.33 ns).

Of course, channels are pretty fast to begin with: even the unbuffered channel can push a message in roughly 250 ns, or 0.00000025 s. This may look insignificant, and you might wonder if those performance improvements matter at all.

But keep in mind that some programs may have dozens of stages connected by channels, where each stage may be wrapped in helpers for filtering, batching, parallelizing or whatnot, each of those using their own channels… It’s easy to reach hundreds of channels that each message has to go through. And that program may be designed to process tens of thousands of messages per second. In the end, we could very well be talking about millions of channel sends and receives per second.

All of a sudden, at a million sends per second, that 250 ns send adds up to 250 ms of channel operations every second: a quarter of your processing time.
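
The arithmetic can be spelled out in a few lines. The figures below are hypothetical round numbers in the spirit of the scenario above (100 channels per message, 10,000 messages per second), and channelTimePerSecond is a helper invented for this sketch. The 50 ns figure is a rounded stand-in for the buffered results in the benchmark.

```go
package main

import (
	"fmt"
	"time"
)

// channelTimePerSecond (hypothetical helper) returns how much time goes into
// channel sends during each second, given the number of channels every
// message passes through, the message rate, and the cost of a single send.
func channelTimePerSecond(hops, msgsPerSec int, perSend time.Duration) time.Duration {
	return time.Duration(hops*msgsPerSec) * perSend
}

func main() {
	// 100 hops x 10,000 messages/s = 1,000,000 sends per second.
	fmt.Println(channelTimePerSecond(100, 10_000, 250*time.Nanosecond)) // 250ms
	// Same load with a send cost rounded to 50 ns.
	fmt.Println(channelTimePerSecond(100, 10_000, 50*time.Nanosecond)) // 50ms
}
```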

Conclusion

Those numbers will certainly be heavily influenced by your hardware, how many goroutines you’re using and how exactly they consume and produce messages, etc. They’re not to be quoted as-is. But the point stands: buffered channels are more efficient than unbuffered ones.

If your program makes heavy use of channels, and they’re not buffered even though they could be, I would suggest you try adding a buffer of size 10 or 100. Measure the performance before and after, and see what you get. You might have a nice surprise!
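
For a quick before-and-after comparison outside of go test, a rough timing harness along these lines can be enough to get a first impression. It is only a sketch: timeSends is a made-up helper, the workload is a trivial consumer, and a real measurement should use your program's actual producers and consumers.

```go
package main

import (
	"fmt"
	"time"
)

// timeSends (hypothetical helper) pushes n values through a channel with the
// given buffer size and returns how long that took, along with the number of
// values the consumer received.
func timeSends(bufSize, n int) (time.Duration, int) {
	c := make(chan int, bufSize)
	received := make(chan int) // carries the consumer's final count
	go func() {
		count := 0
		for range c {
			count++
		}
		received <- count
	}()

	start := time.Now()
	for i := 0; i < n; i++ {
		c <- i
	}
	close(c)
	count := <-received // wait until the consumer has drained the channel
	return time.Since(start), count
}

func main() {
	const n = 1_000_000
	for _, size := range []int{0, 10, 100} {
		elapsed, count := timeSends(size, n)
		fmt.Printf("buffer %3d: %d messages in %v (%v per message)\n",
			size, count, elapsed, elapsed/time.Duration(n))
	}
}
```

Timings from a harness like this are noisy, so it is worth running it several times before drawing conclusions.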